Skype for HoloLens

Design Lead (2013 - 2016)

I was part of the team that designed, from concept to ship, a Microsoft first-party experience on the world’s first Mixed Reality computing device. Skype for HoloLens enables people to connect and interact in each other’s world, even when they are apart.

Invented several core interactions for HoloLens that were adopted platform-wide, including natural hologram manipulation (Hand manipulation of objects and Hand Drawing) and the “Tag-along” menu system

Rapidly prototyped in Unity and other mediums to test new interactions and experience flows

Developed many features including group calls, in-call interactions, main menu UI flows, experience IA, and more

Patents

Mixed-Reality Image Capture US–9824499

Filtering Sounds for Conferencing Applications US–9530426

Contextual Cursor Display Based on Hand Tracking US–10409443

Augmented Reality Field of View Object Follower US–10740971

System and Method for Spawning Drawing Surfaces US–9898865

Natural Hand Interactions Deep Dive

Framing

Skype for HoloLens enabled remote communication and collaboration by supplying several tools users could use to interact in the world. These included placing and manipulating photos, dropping pointers, and drawing.

All these tools worked well on the companion PC, but early in development the HoloLens hardware did not support hand tracking.

Problem

Due to the initial hardware limitations, the HoloLens user could only draw in the world with their head, a very unnatural process. My task was to explore how we could bring hand tracking into our interactions to make them feel more natural.

Challenges

Many technical and interaction challenges existed that all needed to be addressed:

What is the mechanism for moving the cursor?

Where in the world is the line placed?

How does the user know when their hand is being tracked accurately?

What happens when the user turns their body while drawing?

etc.

Approach

Though we conducted a breadth of research, we were in completely uncharted territory. No other mixed reality headset existed, and although some VR headsets did, their drawing experiences all took place at the x, y, z position of the controller rather than being projected into the world.

Starting with Hand Drawing, the first task was to establish our goals, test cases, and success criteria.

Enable users to easily mark up their space to communicate basic ideas visually

Speed of Conversation: if you can describe something aloud faster than you can show it with holograms, the tool isn’t efficient enough

Established test cases based on current key demos and use cases. In this case our basic drawings focused on “Markup” shapes: shapes that would guide or modify existing objects in the world, as opposed to drawing for artistic purposes.

Iteration One

With the head as a starting point, draw a line through the hand to the drawing surface. As the user draws, keep the head as the anchor point.

We knew this likely would not work, but we knew we could implement it quickly and learn, so that’s what we did.

Result

Even small hand movements would end up with a large drawing on the surface.

Iteration Two

Explore moving the starting pivot point to different locations (Neck, Shoulder, Elbow).

Result

Although this mitigated the issues from Iteration One, it created others, such as needing to know the user’s height and proportions in order to place the starting point. The drawings were also still disproportionate.

Key Learning

When using an anchor point for the line traveling through the hand, the end drawing is always exaggerated.
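The exaggeration can be illustrated with a little geometry. The following is a minimal sketch, not the shipped implementation: it assumes a flat wall at a fixed depth, and the function name `project_through_hand` and all positions (in meters) are hypothetical values chosen for illustration.

```python
def project_through_hand(anchor, hand, wall_z):
    """Extend the ray anchor -> hand until it hits the plane z = wall_z."""
    ax, ay, az = anchor
    hx, hy, hz = hand
    dz = hz - az
    if dz <= 0:
        raise ValueError("hand must be between the anchor and the wall")
    t = (wall_z - az) / dz  # how far the ray must be stretched to reach the wall
    return (ax + t * (hx - ax), ay + t * (hy - ay), wall_z)


# Hypothetical setup: head at the origin, hand 0.5 m ahead, wall 3 m ahead.
head = (0.0, 0.0, 0.0)
wall_z = 3.0
hand_before = (0.00, -0.2, 0.5)
hand_after = (0.05, -0.2, 0.5)  # the hand slides 5 cm sideways

p_before = project_through_hand(head, hand_before, wall_z)
p_after = project_through_hand(head, hand_after, wall_z)

hand_travel = hand_after[0] - hand_before[0]
wall_travel = p_after[0] - p_before[0]
amplification = wall_travel / hand_travel  # equals wall depth / hand depth
```

In this setup the amplification is the ratio of the wall's distance to the hand's distance from the anchor, so any anchor placed behind the hand enlarges the stroke whenever the wall is farther away than the hand.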

Iteration Three

This was our first attempt at removing the anchor point from the line.

Using the Head Gaze as the initial point on the surface, once the user Air Tapped (click gesture), a line was created from the user’s hand to the collision point on the wall.

Result

This was a massive success as the drawing moved relative to the hand in a more natural and controllable way.

Key Learning

Although it was easier to control, we found the precise one-to-one mapping didn’t match the user’s expectations. If they wanted to circle a photo on their wall, they would need to draw a circle with their hand as large as the real-world photo on the wall.

This caused hand tracking to be lost and required large, tiring arm movements.

Because of this, we added slight multipliers to the X and Y movement of the user’s hand.
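As a rough illustration of this relative mapping, the sketch below moves the cursor from the gaze-selected start point by the hand's displacement, scaled per axis. The function name `cursor_on_wall` and the gain value of 1.5 are assumptions made for the example; the actual multipliers used in the product are not stated here.

```python
def cursor_on_wall(start_point, hand_start, hand_now, gain_x=1.5, gain_y=1.5):
    """Offset the cursor from its start point by the scaled hand displacement.

    start_point: (x, y) on the wall where head gaze hit at the Air Tap.
    hand_start:  (x, y) hand position at the Air Tap.
    hand_now:    (x, y) current hand position.
    gain_x/y:    hypothetical multipliers; 1.0 would be a one-to-one mapping.
    """
    dx = hand_now[0] - hand_start[0]
    dy = hand_now[1] - hand_start[1]
    return (start_point[0] + gain_x * dx, start_point[1] + gain_y * dy)


# The hand moves 20 cm right and 10 cm up from where the Air Tap happened.
cursor = cursor_on_wall(
    start_point=(1.0, 1.5),
    hand_start=(0.1, -0.2),
    hand_now=(0.3, -0.1),
)
```

Because the cursor depends only on how far the hand has moved since the tap, not on where the hand sits relative to the head, the stroke size stays predictable, and a gain above 1.0 lets a smaller gesture cover a larger area of the wall.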

Iteration Four

Most of our testing was done facing directly forward. Now we needed to make sure we could move our head and body while drawing and not have any side effects.

Result

With our implementation, as the user turned their head, the line remained stable, facing the wall.

This caused issues when the user turned, since they might be drawing a long line across multiple walls in their space. In this case the line stayed pointed in the original direction.

Iteration Five

Here we tried rotating the line alongside the head rotation.

Result

This worked when the user turned their whole body (head and hand together), but if the user ever looked away for a moment, they ended up drawing all over their wall unintentionally.

Key Learning

We had no way of knowing which way a user’s body was oriented; we only knew which way their head was facing. So we couldn’t use the head in this direct way to rotate the vector of the drawing pointer. It was still our only input for any type of rotation factor, though, so we had to find the right implementation.

Iteration N

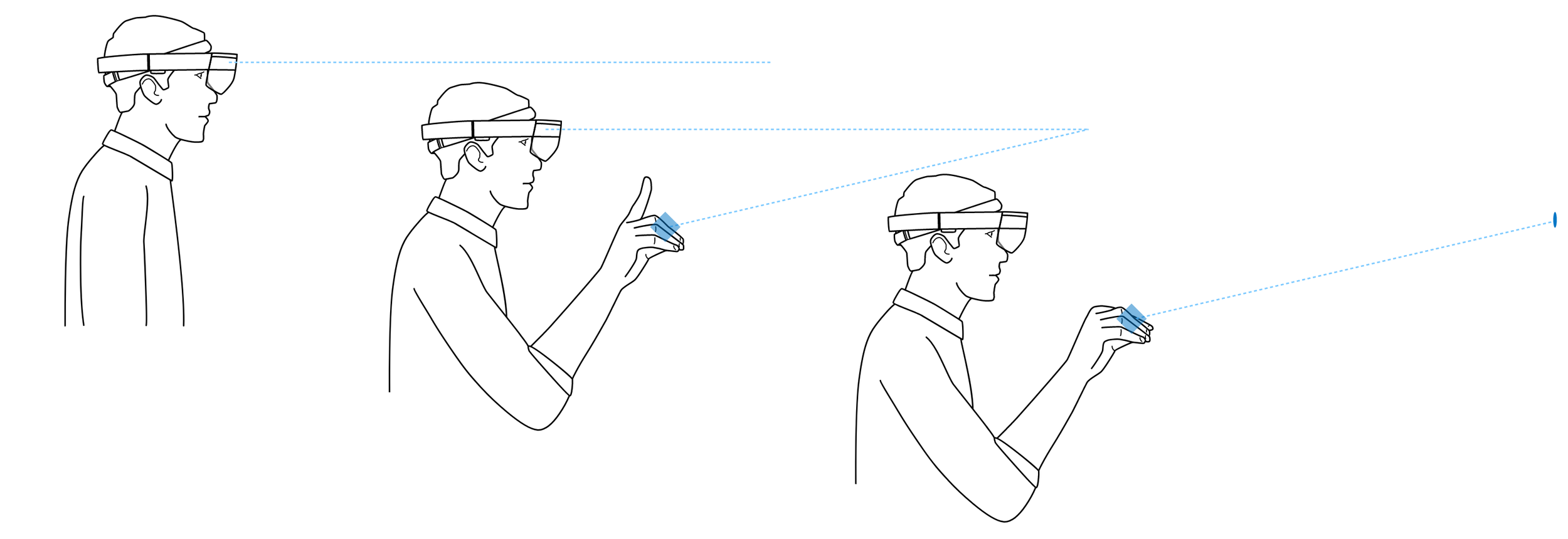

After trying many other explorations, we landed on creating a projection plane placed behind the user’s hand. This plane would only impact the rotation of the drawing by mapping to the user’s head rotation. The line to the wall would not start drawing until hand movement relative to the world was detected.
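One way to picture how these two rules could combine is sketched below. It is an illustrative assumption, not the shipped code: the class name `PlanePointer`, the gain, the 1 cm movement threshold, and the yaw convention (rotation about the vertical axis, forward along -z at yaw 0, positive yaw turning left) are all hypothetical.

```python
import math


class PlanePointer:
    """Tracks a cursor in plane-local (u, v) coordinates.

    Head yaw only orients the plane's horizontal axis; the cursor advances
    only when the hand itself moves in world space.
    """

    def __init__(self, hand_start, gain=1.5, min_move=0.01):
        self.last_hand = hand_start
        self.gain = gain          # hypothetical movement multiplier
        self.min_move = min_move  # hypothetical threshold in meters
        self.u = 0.0
        self.v = 0.0

    def update(self, hand, head_yaw):
        dx = hand[0] - self.last_hand[0]
        dy = hand[1] - self.last_hand[1]
        dz = hand[2] - self.last_hand[2]
        if math.sqrt(dx * dx + dy * dy + dz * dz) < self.min_move:
            return None  # head-only motion: nothing is drawn
        # The plane's "right" axis follows the head's yaw.
        right_x, right_z = math.cos(head_yaw), -math.sin(head_yaw)
        self.u += self.gain * (dx * right_x + dz * right_z)
        self.v += self.gain * dy
        self.last_hand = hand
        return (self.u, self.v)


pointer = PlanePointer(hand_start=(0.0, 0.0, -0.5))
first = pointer.update((0.1, 0.0, -0.5), head_yaw=0.0)           # hand moves right
glance = pointer.update((0.1, 0.0, -0.5), head_yaw=math.pi / 2)  # head turns, hand still
turned = pointer.update((0.1, 0.0, -0.6), head_yaw=math.pi / 2)  # hand moves after turning
```

In this sketch a glance away produces no stroke because the hand has not moved, while a hand movement made after turning continues the line in the rotated frame.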

Result

This is effectively the end implementation of hand pathing interactions for Skype for HoloLens on the first generation of HoloLens. This system allowed users to effectively mark up their world while handling all edge cases, such as different head and body movements around their space.

Impact

Ultimately the hand drawing felt so natural (at least relative to manipulating objects with your head) that all head interactions across the platform immediately felt outdated. We immediately propagated our hand tracking method to manipulating photos in the Skype experience, and the platform team studied our implementation and adopted it as part of the core interaction system for HoloLens. Until our work, HoloLens had been planned to ship without hand path tracking.

Example Testing

Here is an example of one of the test cases during the development.

Twice a week, a different group of people took part in usability studies, working through a standardized set of actions. An objective ranking was used to track the progress of the system.